Published on: 15 Jul 2023

On #robot judges: part 1.

The Business Times has published an opinion piece,¹ in which the author suggests that there are "good reasons" to "embrace legally binding and enforceable #AI-generated reasoned determinations".

I disagree.²

Before I address the author's arguments, let's remind ourselves what exactly an AI Large Language Model (#LLM) is. Essentially, it is a very powerful predictive text generator.³

But what does this really mean?

I'll demonstrate.

---

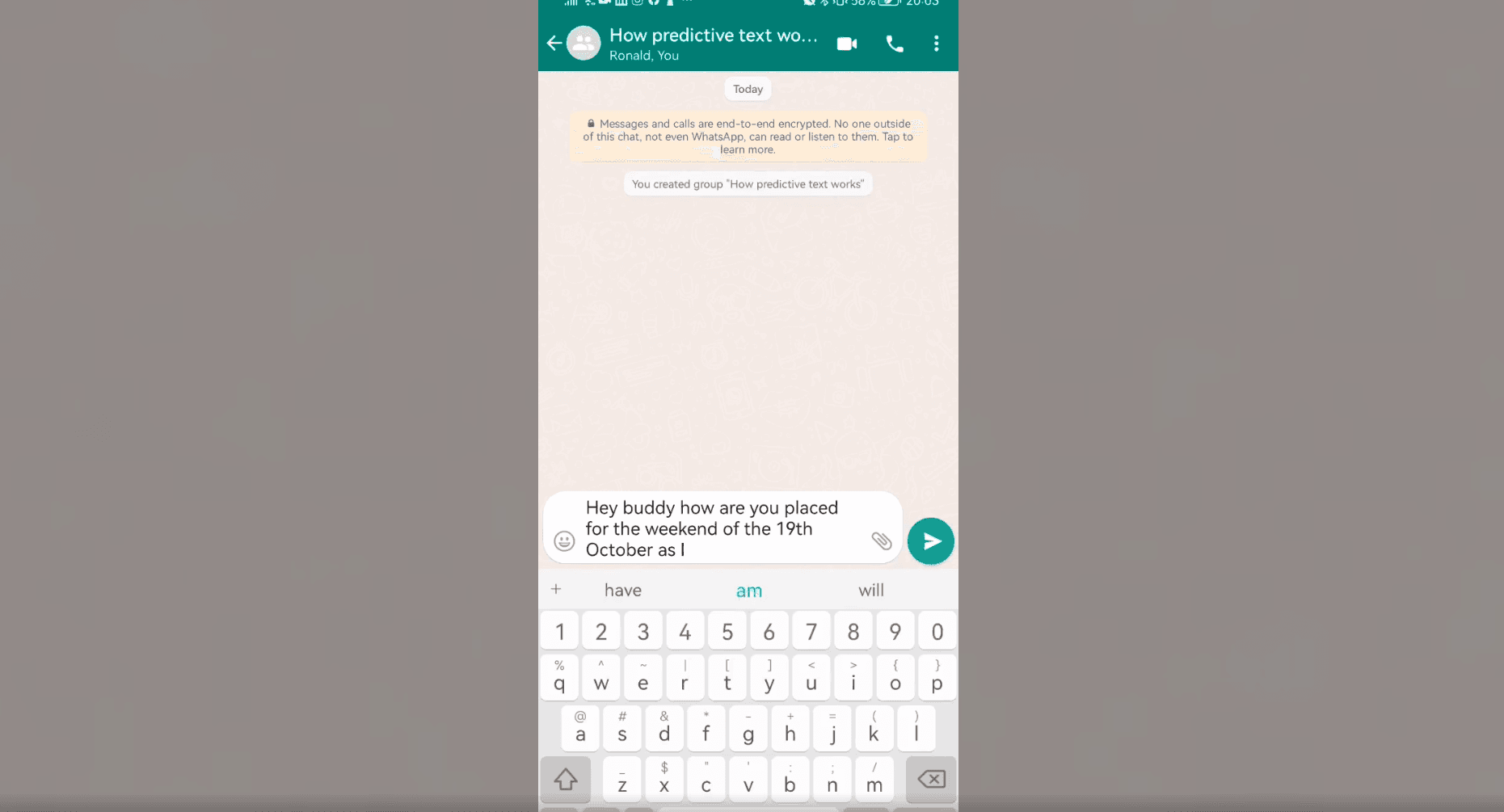

Do me a favour. Take out your smartphone.⁴ Open your favourite messaging app. Find a contact who's not going to judge you if they receive a weird message from you.

In the text box, type "Hey", followed by a space.

Chances are, you have a predictive keyboard installed. If so, you'll see suggested words pop up above your keyboard. And if you tap on one of those words, it will be inserted into the text box, after the last word.

And...

Actually, you know what? If a picture is worth a thousand words, a video must be worth millions.

So help me out here - watch the embedded video, then come back to this post.

---

Now, LLMs like #ChatGPT operate similarly to predictive text (as you just saw), but with a few differences:

1) Different inputs.

Think of the initial "Hey" you input into a predictive keyboard as the equivalent of the prompt you feed into an LLM. So in the example we just saw, the input is the word "Hey", and the predictive keyboard suggested the next word after "Hey", then the next word after "buddy", and so forth.

For an LLM like ChatGPT, by contrast, your input is the entire prompt. So, for example, if your prompt is "Can LLMs reason?", the LLM predicts words in response to that whole question.

2) Different sources of predictions.

A predictive keyboard predicts the next word based on its user's typing habits. My predictive keyboard thinks that the "best" word following my "Hey" is "buddy".⁵ Your predictive keyboard may think that the best word following your "Hey" is "folks", "bro", "babe", etc.

Conversely, LLMs predict the next word via crowdsourcing, so to speak: huge volumes of data are fed into LLMs, and the LLM decides, based on that data and its training, which words are the "best" ones to predict in response to your prompt.

3) Different outputs.

A predictive keyboard will only suggest a few words at a time, based on the previous word. Also, you have to pick the next word from those suggestions yourself; only then does the keyboard generate the next set of suggestions.

Conversely, LLMs generate entire sentences or paragraphs in response to prompts. And they decide, for you, which of the potential predicted words / phrases / sentences are the "best" response to the prompt (and perhaps the preceding words).
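For readers who like to see the moving parts, here is a toy Python sketch of the keyboard side of this comparison. It assumes, purely for illustration, that a predictive keyboard does nothing more than count which word follows which in your past messages (real keyboards are more sophisticated, and their internals vary by vendor). The message history and the function names `build_model`, `suggest` and `autocomplete` are all made up. `suggest` mimics the keyboard offering a few words for you to tap; `autocomplete` mimics the "decides for you" behaviour by always taking the top suggestion. This is not how ChatGPT works: as noted above, an LLM responds to the whole prompt rather than just the last word, and draws on vastly more data.

```python
from collections import Counter, defaultdict


def build_model(messages):
    """Count which word follows which in a (hypothetical) message history."""
    follows = defaultdict(Counter)
    for message in messages:
        words = message.lower().split()
        # Pair each word with the word that came right after it.
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def suggest(follows, prev_word, k=3):
    """Keyboard-style: return up to k likely next words; the user picks one."""
    return [word for word, _ in follows[prev_word.lower()].most_common(k)]


def autocomplete(follows, prompt, max_words=5):
    """Toy 'decide for you' loop: keep appending the top suggestion.

    Unlike an LLM, this looks only at the last word, not the whole prompt.
    """
    words = prompt.split()
    for _ in range(max_words):
        if not words:
            break
        options = suggest(follows, words[-1], k=1)
        if not options:  # no word has ever followed this one
            break
        words.append(options[0])
    return " ".join(words)


# Hypothetical message history, made up for illustration.
history = [
    "hey buddy how are you",
    "hey buddy are you free tonight",
    "hey folks see you soon",
]
model = build_model(history)
print(suggest(model, "Hey"))       # ['buddy', 'folks']
print(autocomplete(model, "Hey"))  # Hey buddy how are you free
```

Each step simply picks the most frequent follower of the previous word (ties go to whichever was seen first); nothing in the loop checks whether the resulting sentence actually makes sense.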

---

So what does this have to do with robot judges?

Well, we'll get there - eventually.

In part 2, we'll explore whether LLMs are able to reason, and why it matters.

Disclaimer:

The content of this article is intended for informational and educational purposes only and does not constitute legal advice.

H/t to Ronald JJ Wong for drawing my attention to the opinion piece, and putting up with my slightly unhinged ranting.

¹ https://www.singaporelawwatch.sg/Headlines/robot-judges-not-a-question-of-legitimacy-but-of-choice-opinion.

² Based on the present state of AI.

³ https://openai.com/blog/chatgpt. But some may find this assertion controversial; e.g.: https://www.lesswrong.com/posts/sbaQv8zmRncpmLNKv/the-idea-that-chatgpt-is-simply-predicting-the-next-word-is.

For the avoidance of doubt, I am not suggesting that LLMs generate output word by word, based only on the previous word. The length and sophistication of the output does suggest that LLMs receive, as input, the prompt as a whole, then generate what is a likely answer to that prompt – and the generation process may be phrase by phrase, sentence by sentence, or even paragraph by paragraph.

Also, if you disagree with this assertion, can I please request that you finish reading the post before leaving an angry comment?

⁴ Assuming it’s not already in your hands.

⁵ This means, of course, that “buddy” is my most used term of address when I text. Huh.